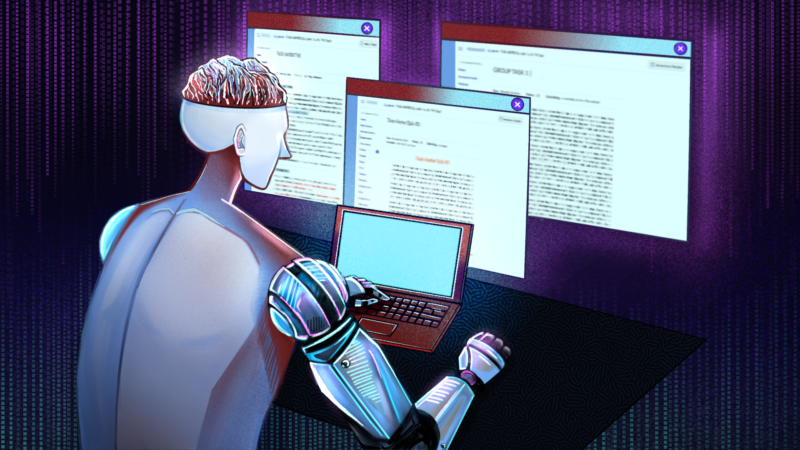

IN RECENT weeks, the widespread trend of Artificial Intelligence (AI) generating scholastic works has sparked concern among educators regarding the future of critical thinking and creativity.

As the integration of AI in academia challenges conventional teaching approaches, educators are left searching for proper ways to adopt the evolving technology.

Until then, ethical concerns abound over the increasing use of AI in academic settings. Students, for example, now have more tools to commit plagiarism. This academic dishonesty undermines the integrity of the educational system at large, with long-term negative consequences for students and faculty alike.

Machine catalysts

As AI continues to evolve, AI bots have become better at analyzing large datasets of existing content. They learn patterns and structures from that content to generate new text that resembles the original in style and tone, making AI-generated work read like fresh content written by humans.

One of the various techniques that AI utilizes is Natural Language Processing (NLP), which combines linguistics, computer science, and artificial intelligence to analyze and manipulate human language using algorithms.

Machine Learning (ML), another branch of AI, allows computers to improve at a task by learning from data rather than by following explicitly programmed rules. ML algorithms let AI bots analyze large datasets, and they commonly power chatbots and language translation applications.

Deep Learning (DL), on the other hand, is a subfield of ML that focuses on building multi-layered neural networks. DL models are trained on massive datasets of text and are often used in language generation models and speech recognition tools.

ChatGPT, which employs a combination of all these techniques, has taken the internet by storm, as the chatbot can write stories and essays, solve mathematical problems, and write code. All these functions can also be used by students to cheat in school.

While AI detectors can be effective in identifying AI-generated content, they are not foolproof. False positives can occur when human-generated content is mistakenly flagged as machine-generated. This can have serious consequences for individuals, such as being accused of plagiarism or academic dishonesty.
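To see why false positives matter even when a detector seems accurate, consider a rough sketch of the arithmetic. Every number below is hypothetical and chosen only for illustration: a batch of 1,000 essays, 10% of them AI-written, a detector that catches 90% of AI-written essays, and one that wrongly flags 5% of human-written essays. None of these figures come from a real detector or a real class.

```python
def expected_flags(num_essays, ai_share, detection_rate, false_positive_rate):
    """Estimate how many flagged essays are AI-written versus human-written.

    All inputs are hypothetical rates between 0 and 1 (except num_essays).
    Returns (correct_flags, wrong_flags, share_of_flags_that_are_wrong).
    """
    ai_written = num_essays * ai_share
    human_written = num_essays - ai_written

    correct_flags = ai_written * detection_rate          # AI essays caught
    wrong_flags = human_written * false_positive_rate    # honest essays flagged

    total_flags = correct_flags + wrong_flags
    wrong_share = wrong_flags / total_flags if total_flags else 0.0
    return correct_flags, wrong_flags, wrong_share


if __name__ == "__main__":
    # Hypothetical scenario, not measured data.
    correct, wrong, share = expected_flags(1000, 0.10, 0.90, 0.05)
    print(f"Correctly flagged: {correct:.0f}")
    print(f"Wrongly flagged:   {wrong:.0f}")
    print(f"Share of flags that hit honest students: {share:.0%}")
```

Under these assumed rates, roughly 90 AI-written essays and 45 human-written essays would be flagged, so about one in three accusations would land on a student who did nothing wrong. Because honest students far outnumber cheaters in this scenario, even a small error rate produces a large number of false accusations.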

Two heads

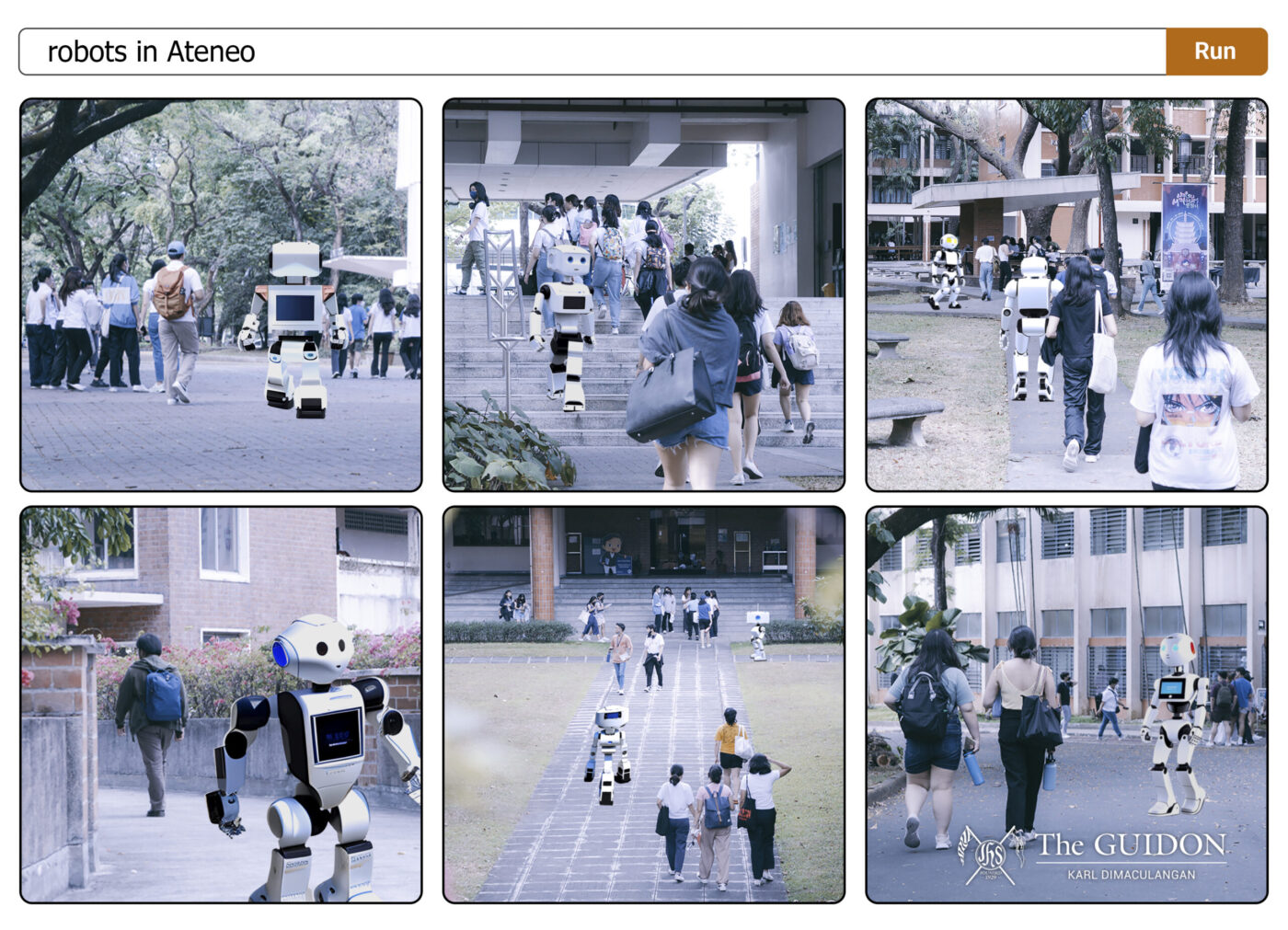

The same concerns are felt within the Loyola Schools (LS).

Gabrielle Anne Uy (2 BS ITE) had questions regarding data privacy and plagiarism when talking about AI in the academe. However, her stance changed after learning about its purpose as a technology that can be used to “aid rather than replace humans’ work.”

Similarly, Project Leader of the Ateneo Laboratory for the Learning Sciences Ma. Mercedes T. Rodrigo advocates the use of AI for personalized learning experiences.

“If you are [to write] a research paper and you don’t know where to begin, you can actually post a question to something like ChatGPT and it will give you some ideas, but it’s up to you to develop them,” she shares.

However, she also acknowledges that the use of AI must have limits. She asserts, “We have to be very, very clear about what’s acceptable use and what is not acceptable. […] I would encourage my thesis students, for example, to use it if only to fix grammar and style but not to actually write anything.”

Parsing pedagogy

ChatGPT and other AI models offer students convenience through their ability to quickly find and gather useful information, which points to their potential as valuable learning tools.

In fact, John Gokongwei School of Management Information Technology Entrepreneurship Program Director Joseph Ilagan has come to recognize the place of AI in the academe.

“The teacher and students may use AI in the creation of course content and the submission of assignments. The teacher will disclose the use of AI in relevant areas of course content creation,” he indicates in his course syllabus.

In one instance, he tasked his students with using ValidatorAI to get feedback on their startup ideas. Ilagan also creates course content with the help of AI transcription and grammar-checking tools.

While AI use will not be considered cheating, Ilagan says that he reserves the right to deduct points from a student’s grade if they use it without putting any effort into their output.

With this, various stakeholders in the technology industry also call for the ethical use of AI within and outside academia.

Machine for machinations

While optimistic about the future of AI, Rodrigo fears that students’ misuse of the technology may undermine their learning. Thus, she emphasizes the need to set limitations on AI use.

As AI has grown more sophisticated, it has found use in activities more sinister than computer-assisted plagiarism. Thus, the question of how morality can be ensured in AI use continues to baffle AI experts and educators.

Although the ethics of AI remains debatable, hardwiring these moral considerations into users themselves is a different story. As such, the challenge is for AI users to keep human morality in control of a rapidly automating world.