BENEATH THE political agitation associated with disinformation lies a complex web of networks pushing forth false narratives amid weakly regulated social media algorithms. As the public increasingly begins to scrutinize disinformation, advocacy groups and the press have constantly emphasized the culpability of the state and big tech groups. However, disinformation actors in the local scene continue to operate in a highly commercialized manner due to huge market demand—alluding to a thriving industry of disinformation.

With Filipinos now more reliant than ever on social media as their source of information, further understanding disinformation tactics is crucial in preventing netizens from helping circulate the falsehoods they see online.

Deploying disinformation

Social media has become a potent arena for disinformation, with politicians such as the Marcoses deliberately weaponizing it for personal gain. Identifying and dissecting disinformation across social media is difficult because it coexists with news, opinion, and entertainment, according to University of the Philippines Diliman Communication Research Assistant Professor Fatima Gaw.

Gaw explained that the difficulty stems from computational propaganda wherein disinformation actors strategically deploy bots and trolls. “Bots are programmed accounts online that can facilitate massive or organized inauthentic actions,” she said, illustrating how the movement of these bots makes certain topics trend.

On the other hand, Gaw described trolls as paid individuals who use inauthentic accounts to manufacture discourse and drown out opposing opinions on news articles with hostile rhetoric rather than rational arguments.

Trolls and bots have long been utilized by candidates and government officials to manipulate public opinion during events such as the 2016 Philippine elections. President Rodrigo Duterte even admitted to paying social media users and groups to spread propaganda and disinformation during his campaign.

More than a product of paid propaganda, the spread of disinformation is also due to the algorithms of many digital platforms. Gaw noted that commercial platforms are not necessarily made for users of various backgrounds to converge for political debates.

“Their political [and] economic character as a commercial [technology] platform—that denies their media or editorial responsibility—is one of the reasons why disinformation [permeates] these platforms,” she said, pointing to the platforms’ lack of accountability in moderating circulating and popular content.

While algorithms are necessary for platforms to function efficiently due to the sheer amount of content being uploaded at a time, Gaw noted there must be mechanisms in place to prevent actors from manipulating them.

“[Algorithms are] not necessarily the enemy here. They’re mechanisms to govern [social media content]. But how the algorithm works—the logic that fuels their decision-making—is what [makes it] problematic,” Gaw explained.

A whole industry

Beyond algorithms, trolls, and bots, disinformation also involves various actors engaged in a shrouded yet organized industry. University of Massachusetts Amherst Global Digital Media Associate Professor Jonathan Corpus Ong, PhD, stated that digital disinformation is merely an expansion of traditional tools that consultants and campaign strategists have long used.

“What we call trolling is often cloaked in industry jargon … so it’s made [to] sound more respectable than what it actually is,” he said. As such, disinformation actors often see their work more as advertisement and public relations (Ad and PR) rather than political trolling.

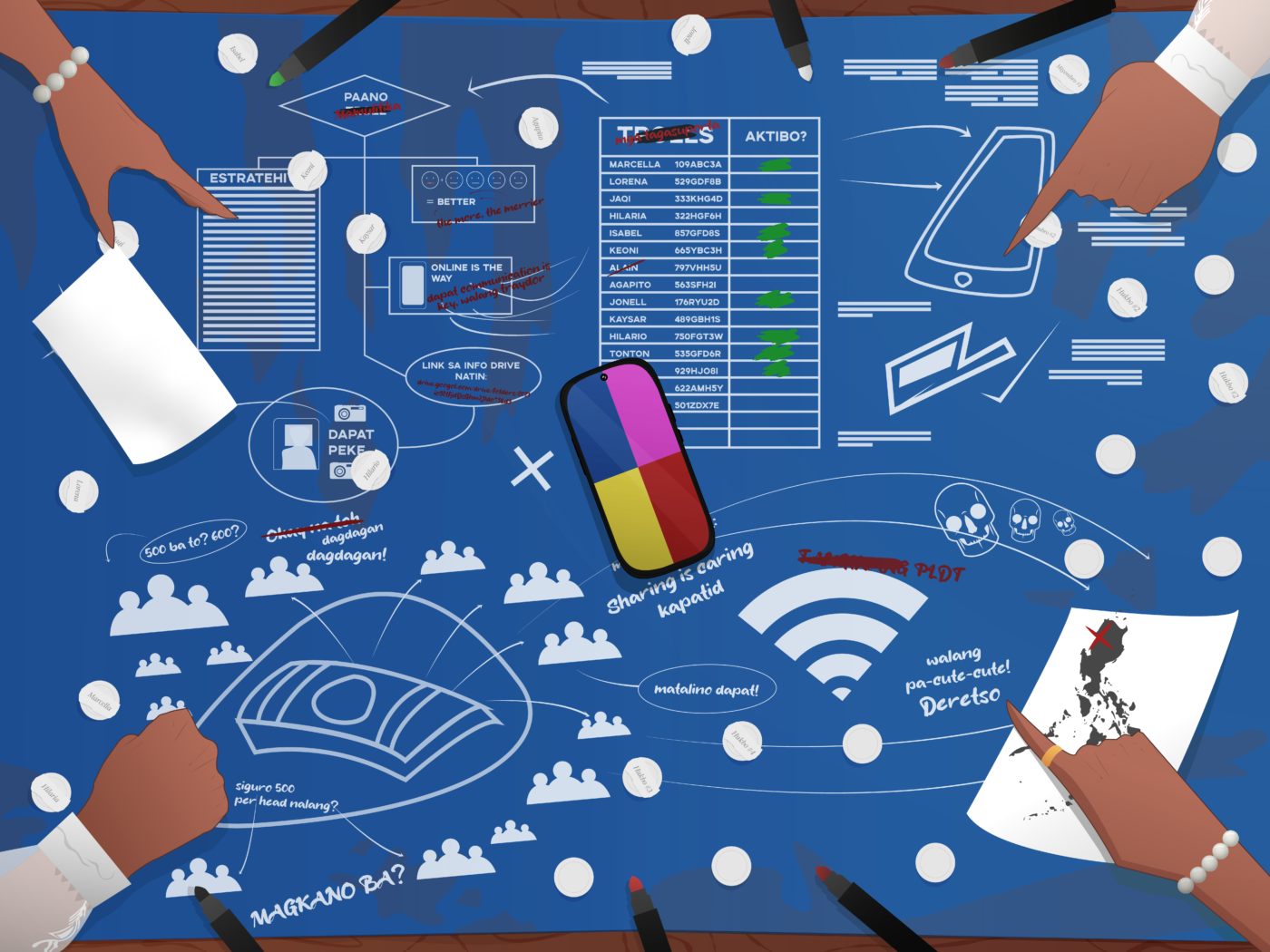

In a report entitled Architects of Networked Disinformation, Ong illustrated various stages of such campaigns. First, political clients set objectives for Ad and PR executives. Strategists then plan how the “click army” would fulfill these goals. Lastly, operators carry out the plan through positive branding, diversionary tactics, negative campaigning, trending, or signal scrambling. All these steps lead to networked narratives that reach grassroots supporters and mainstream media.

Ong noted that pursuing trolls alone to combat disinformation is inefficient given their low-level position in a much larger hierarchy. Aside from being smaller targets, operators often see and justify their work as mere gigs while differentiating themselves from “true trolls” such as actual supporters. “No one is a full-time troll. Trolling is a sideline,” Ong remarked.

Furthermore, Ong raised that the news media’s focus on pro-government disinformation risks limiting the issue to the administration. In reality, the industry heavily involves many other political actors to varying degrees.

“Everybody is in on it,” he said, noting that consultants also work for local-level officials and even for candidates who are not aligned with one another. As such, discussions on disinformation must not see it simply as a struggle of political agendas, but as a highly organized industry with actors on various levels who are motivated by commercial interests.

Passing the buck

Social media platforms have virtually no monetary incentive to take long-term action against political disinformation since it still brings in engagement and, by extension, revenue. This holds true especially for countries in the Global South.

While platforms have pledged to help maintain the integrity and quality of Philippine political content as the 2022 elections approach, content moderation policies are still implemented on a case-by-case basis and are thus easily exploited by disinformation actors.

“How [these platforms] intervene is very symbolic, very tokenistic,” Gaw said. “For example, very big, tentpole events like COVID-19 or the US election—those things, they act on. But the issues of smaller countries like us […], it’s not a priority for these platforms to act on.”

Gaw further noted that platforms are only likely to take action if faced with legal-economic consequences, such as the ongoing antitrust lawsuit filed against Facebook by the United States Federal Trade Commission. Nevertheless, she emphasized that social media is merely a platform for the advertising industry that actively bolsters the spread of disinformation.

Ong similarly cautioned against mainstream reportage glossing over how advertising agencies take in political clients, for whom disinformation is then manufactured. As such, tackling this industry should not just concern social media platforms. Ong advocated for a Whole-of-Society approach.

“There needs to be discussions of ethics and self-regulation within the industry […]. Hati-hati [yung campaign work] (the campaign work is split), so no one is fully responsible, but everyone is complicit,” he said.

Both Gaw and Ong stressed that civil initiatives remain equally important and encouraged the youth to take collective action by volunteering in anti-disinformation efforts. According to Gaw, members of the electorate must remain vigilant against disinformation and educate their personal circles.

Meanwhile, Ong called for the creation of safe spaces wherein disgruntled workers and whistleblowers from the advertising industry can share experiences of being made to manufacture disinformation.

Ultimately, accountability for the consequences of disinformation cannot be ascribed to any single sector or platform. Only with a holistic understanding and structural approach to the machinery of political campaigning can there be a pushback against falsehoods online.